There are many times when one wants to see which process is responsible for network traffic on an endpoint. While there are ways to look at which process has an open socket, this doesn’t help with UDP, and it’s often more useful to simply record a capture and later see which process created each packet.

On Windows this is very easy using Pktmon / netsh trace / Network Monitor to do a capture, but on macOS it’s not that straightforward.

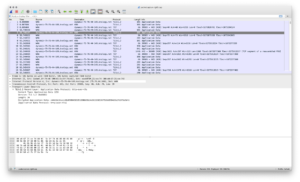

Using tcpdump with the -k argument, one can print the process name and PID to the console. In this example I’m filtering on just the host 8.8.8.8 (Google Public DNS), then running dig in another window to look up dingleberrypie.com:

c0nsumer@myopia ~ % sudo tcpdump -k -i en0 host 8.8.8.8

Password:

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on en0, link-type EN10MB (Ethernet), capture size 262144 bytes

09:17:58.210959 pid dig.76987 svc BE IP myopia.home.nuxx.net.56248 > dns.google.domain: 10294+ [1au] A? dingleberrypie.com. (47)

09:17:58.292541 IP dns.google.domain > myopia.home.nuxx.net.56248: 10294 1/0/1 A 96.126.107.52 (63)

The first packet line is the query going out to 8.8.8.8, and its pid dig.76987 portion shows that it came from a dig process with process ID 76987.

Unfortunately, due to the non-standard way Apple writes this metadata into files, it’s not viewable in Wireshark. Having it there would be very nice, as it’d make parsing large captures much easier.

For now it seems the best way to do this is to record the capture to a file, then feed it back into tcpdump. Recording requires using the pktap pseudo interface (see the tcpdump man page about the --interface argument) to ensure the process metadata is saved into the file. The same capture as above, writing to a file called out.cap, would be as follows:

sudo tcpdump -i pktap,en0 host 8.8.8.8 -w out.cap

This can then be fed back into tcpdump for parsing/filtering/viewing:

c0nsumer@myopia ~ % tcpdump -k -r out.cap

reading from PCAP-NG file out.cap

09:27:10.964758 (en0, proc dig:77619, svc BE, out, so) IP myopia.home.nuxx.net.52983 > dns.google.domain: 56446+ [1au] A? dingleberrypie.com. (47)

09:27:11.018809 (en0, proc dig:77619, svc BE, in, so) IP dns.google.domain > myopia.home.nuxx.net.52983: 56446 1/0/1 A 96.126.107.52 (63)

c0nsumer@myopia ~ %

In this case it shows both the sending process and the process which received the packet.
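For a large capture, it can help to get a quick per-process packet count before doing any further filtering. The following is a minimal sketch, not part of tcpdump itself: summarize_procs is a hypothetical helper name, and it assumes the text output carries the metadata in the "proc name:pid" form shown in the example above.

```shell
# Hypothetical helper: count packets per process from the text output
# of `tcpdump -k -r <file>`. Assumes metadata such as
# "(en0, proc dig:77619, svc BE, out, so)" appears on each packet line.
summarize_procs() {
    awk '
        # Only consider lines carrying a "proc name:pid" metadata field.
        match($0, /proc [^,)]+/) {
            # Drop the leading "proc " (5 characters), leaving "name:pid".
            key = substr($0, RSTART + 5, RLENGTH - 5)
            count[key]++
        }
        END {
            # One line per process: "<packet count> <name>:<pid>".
            for (key in count) print count[key], key
        }
    ' | sort -rn
}
```

It would be used by piping the replayed capture into it, for example `tcpdump -k -r out.cap | summarize_procs`. Lines without a proc field are simply skipped.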

When doing deeper analysis, I’ll use the macOS tcpdump to grab a very broad capture and do first-pass filtering there before bringing the result into Wireshark. See the PACKET METADATA FILTER section of the tcpdump man page for details on how to filter on a PID, process name, etc. From the header of that section:

Use packet metadata filter expression to match packets against descriptive information about the packet: interface, process, service type or direction.
